We Should Take AI-Generated Responses With A Grain Of Salt

Artificial intelligence (AI) has become a popular topic of discussion both in and out of the tech world, especially since the release of GPT-4, which is reported to be able to explain memes [1] and to produce writing that is often hard to distinguish from a human's. Companies and individuals have already started using generative AI tools such as ChatGPT to automate customer support, write articles and complete assignments, often with impressive results.

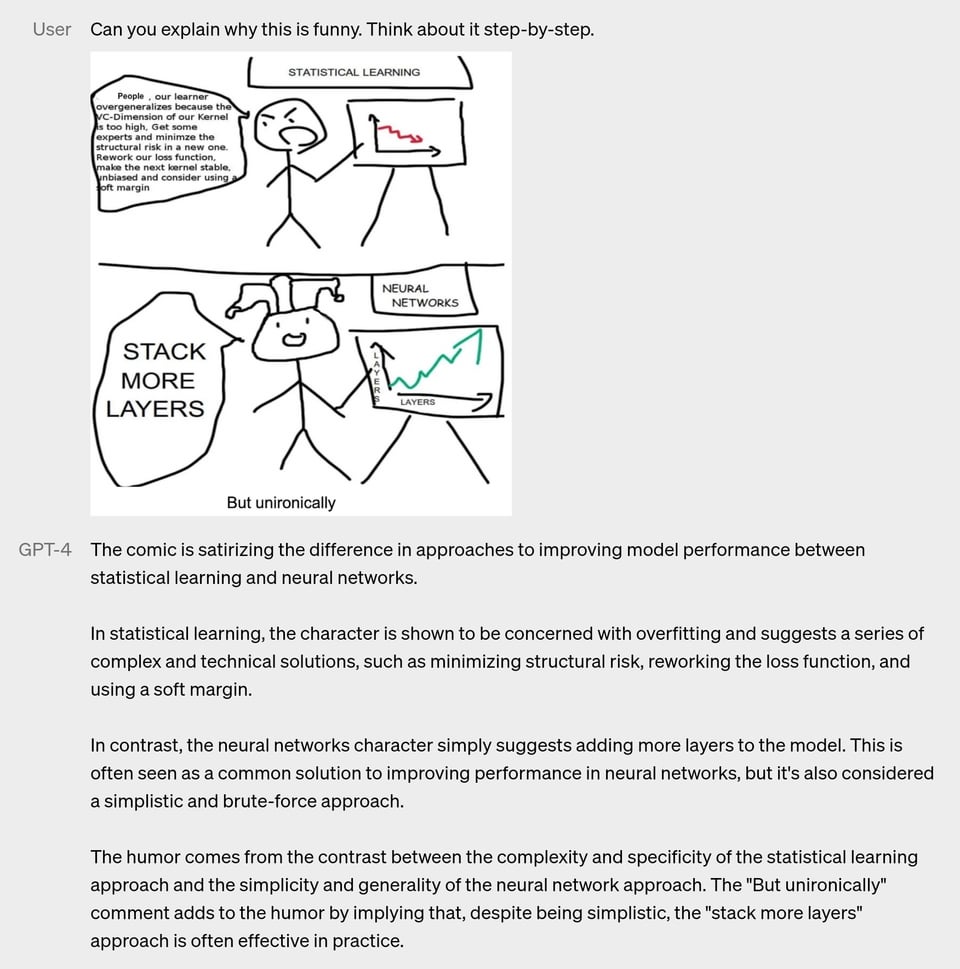

ChatGPT explaining memes - Image from Reddit

However, it is important to be aware of AI’s limitations. The technology is still young and far from perfect.

Although AI-generated art has won competitions and AI can write novels that read as if a human wrote them, its output is not truly original. It reflects the large datasets the models were trained on. Generative AI models are touted as producing unexpected outputs inspired by existing data, but those outputs are ultimately recombinations of what the models have seen in training.

Moreover, the authenticity of the training data is difficult to verify, and the datasets are often biased. For example, GPT-3 was trained on a mix of sources including Common Crawl, web text, books and Wikipedia [2]. A user who receives a generated answer sees only the model's conclusion, not the sources behind it, and so lacks the information needed to judge that conclusion independently. This absence of attribution to the original sources also raises questions about the trustworthiness of AI-generated results.

AI-generated results are essentially guesses based on the data the model was trained on, so it is unwise to blindly trust a machine whose training data cannot be authenticated. AI should be treated as a tool that aids decision-making rather than a substitute for it. Its results should be fact-checked and verified, and it should not be the only tool used, especially when researching unfamiliar topics, because it can state false information with confidence.

AI-generated image for the prompt “an image of a person using a computer with an AI powered assistant or tool”

Even impressive AI results can conceal significant human involvement. For example, the winning entry in the “digital arts/digitally-manipulated photography” category of the Colorado State Fair Fine Arts Competition was later found to have been retouched by its creator before submission [3]. This underscores the role of human creativity in producing AI art; its success should not be attributed solely to the technology.

In short, AI-generated results are not truly original but rather a reflection of the data the model was trained on. It is therefore essential to treat AI as a tool that aids decision-making, not as a substitute for it, and to fact-check and verify its results.

Used this way, AI can boost our analytical and decision-making abilities and heighten our creativity.

This article was written with AI assistance to reword the original draft.

Footnotes

[1] GPT-4 can understand memes

[2] GPT-3 is based on a Transformer architecture that has been improved in 3 steps

[3] AI art winner at Colorado State Fair was retouched by human